
Pulling Up by the Causal Bootstraps: Can Deep Models Overcome Confounding Bias?

Abstract

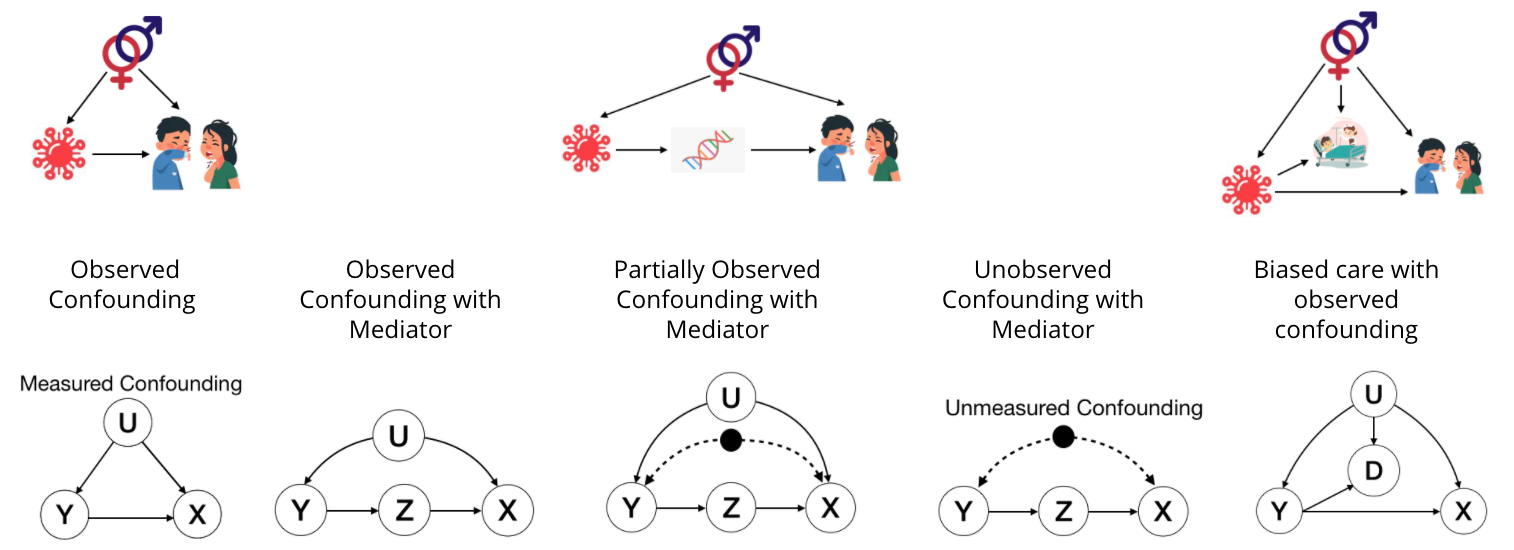

Deep learning models achieve state-of-the-art performance on many supervised learning tasks. However, it is often difficult to evaluate whether models rely on shortcut biases for predictive performance. We study in detail five practically relevant confounded data-generation scenarios, with both known and unknown confounding, motivated by healthcare. Under these settings, we systematically investigate the effect of confounding bias on how well deep learning models generalise to different target environments, using synthetic and three semi-synthetic datasets. We find that medical imaging tasks are more sensitive to biases. We investigate commonly used techniques such as data augmentation, modeling all features jointly, and causality-based bootstrapping, providing insights into the benefits of a causal lens in addressing confounding bias for ML tasks. We further demonstrate how causal bootstrapping helps learn unbiased models, providing significant benefits on biased medical imaging tasks. This systematic investigation underlines the importance of accounting for the underlying data-generating mechanisms, and of a causal framework, in developing methods robust to confounding biases.